According to new information, Microsoft is working on what is called an SLM, a Small Language Model.

“Small Language Models”

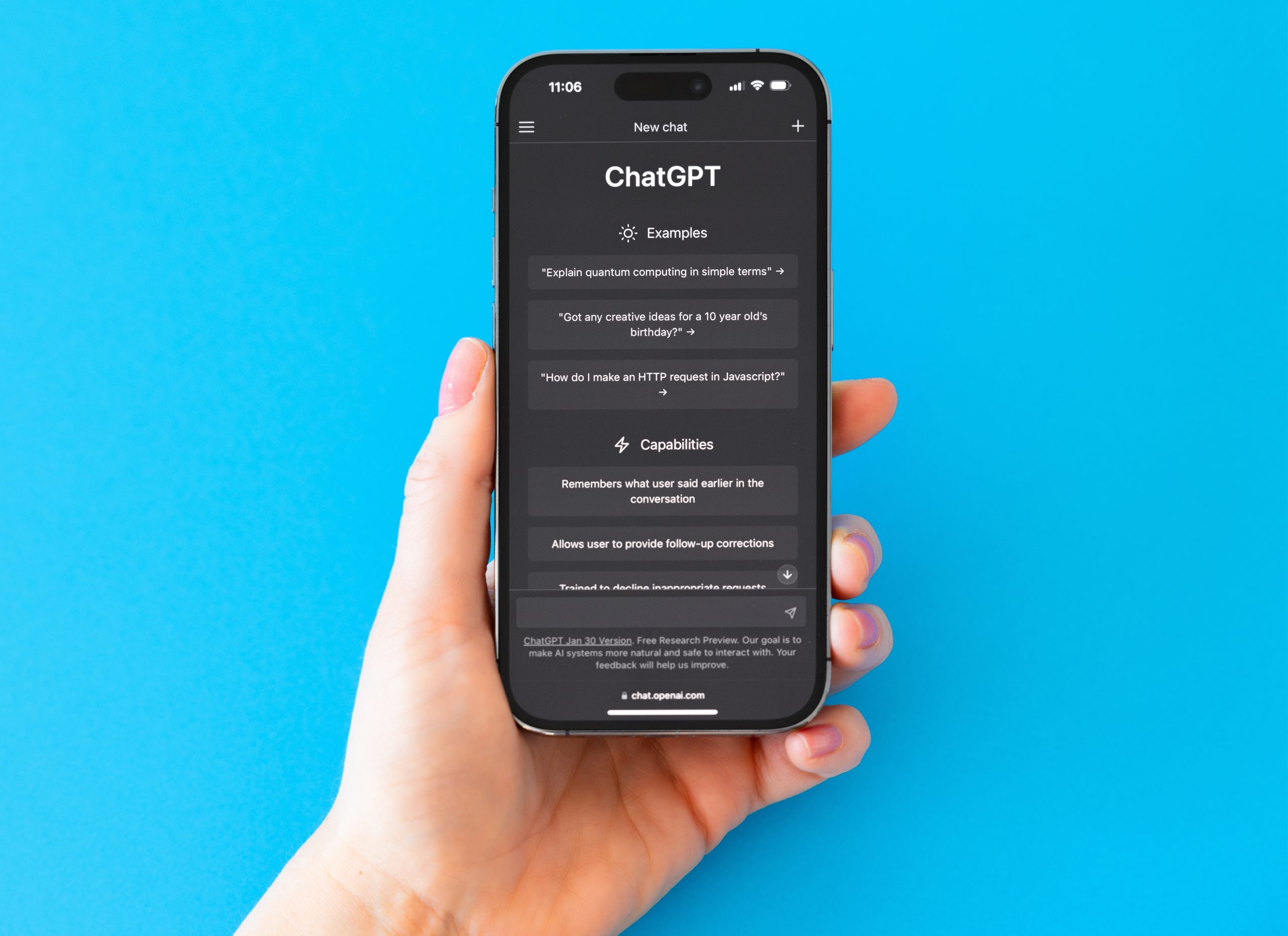

The goal is to run AI on less powerful hardware, which brings to mind a story from last December about Apple's plan to make LLMs run on older iPhones as well:

“An Apple research paper, available to anyone who wants to read the technical details, suggests that Apple has solved the challenges of processing AI queries locally on the phone in your pocket, but there's more. The good news is that the company's researchers have found a way to make LLMs run natively and effectively on iPhones, including older models. How did Apple solve this? By using the iPhone's storage chips, which typically hold 128GB or more, in stark contrast to the 4GB or perhaps 6GB of RAM found in many iPhones.

Could this be a big advantage for Apple? The company has been quiet about LLM-based assistants, but if it comes up with a significantly upgraded Siri that can also run on older iPhones, that could be the big feature it has been looking for.”


The big question now, of course, is whether Microsoft has achieved something similar for Android phones. There is nothing official from Microsoft yet, but reports indicate that the company has a “GenAI” team working on a smaller language model that does not require a lot of resources. The same team is said to be integrated into Microsoft's Azure division and to draw on, among other things, LLM technology from OpenAI, in which Microsoft is a major shareholder.

Whatever the exact plans for the SLM, Microsoft is continuing its AI offensive and earlier this month introduced Copilot Pro, integrated into Microsoft 365.
