Popular Science: If cars are to become self-driving, machines must learn from the human brain.

Time and space are fundamental to the existence of the universe, and human intelligence is our tool for navigating them appropriately. Our ability to anticipate the future is critical.

Which way will the tree fall?

Through evolution, the human brain has become a tool that not only perceives time, place, and things, but also predicts what will happen in the near future. What path will the stone you throw take? Which way will the tree fall? How can you avoid being bitten by the snake in front of you? Where is the child walking along the road about to go?

In some forms of artificial intelligence (AI) we face similar questions. If we could develop algorithms that handle space and time and predict the near future well, society would change dramatically. Algorithms used in self-driving cars are one example – just think how self-driving cars will change society!

The difficult recipe: perceive, predict, and act

The development of self-driving cars is about making machines that act on their predictions about the future in an environment where space and time are essential. When AI is behind the wheel, it is not enough that it follows the traffic rules. It must also use its cameras to see and understand what is nearby (perception), predict the movements of other cars, cyclists and pedestrians (prediction), and act appropriately (action).

Understanding the traffic picture, predicting how others will move and, not least, taking the right actions yourself is a very difficult problem that requires a complex form of intelligence.

What does artificial intelligence look like?

Tesla is the company that has come furthest in developing AI algorithms for self-driving cars. It recently held an “AI Day” (Artificial Intelligence Day, editor’s note), where it explained how the algorithms work.

Tesla also demonstrated the new computer chips and supercomputers it will use to develop algorithms for future self-driving. Fittingly, the first slide in Tesla’s presentation was a familiar representation of the brain and of how the brain’s visual system is built. In other words, the brain and the visual system are an inspiration when Elon Musk and his crew develop their algorithms.

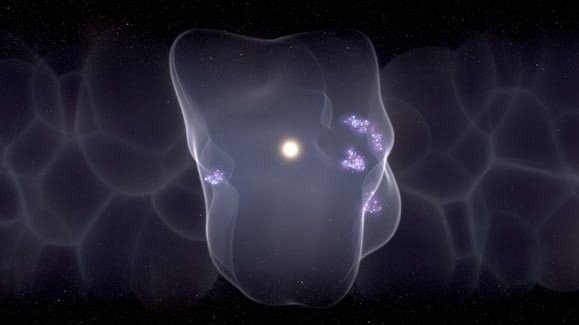

What’s most exciting to me about the new methods Tesla uses is that all predictions are made in what’s called a vector space, which in many ways is the “mental model” of the algorithm. Predictions about the future are not made directly on the images or the video. Video from the car’s cameras is first translated into a consistent, simplified version of reality, just as the brain does. Here, cars, people, cyclists and other things exist as simplified objects in the machine’s “mental model”, where each object has its assigned coordinates in time and space.
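To make the idea of a vector space concrete, here is a minimal sketch in Python. It is not Tesla’s implementation: the class, the field names and the constant-velocity assumption are all hypothetical and chosen only for illustration. It shows how an object can be stored as a handful of coordinates in time and space, and how a prediction can then be made on those coordinates rather than on camera images.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    """A simplified object in the "mental model" (all names are hypothetical)."""
    kind: str    # e.g. "car", "cyclist", "pedestrian"
    x: float     # position in metres, in a top-down frame around the car
    y: float
    vx: float    # velocity in metres per second
    vy: float
    t: float     # time stamp in seconds


def predict(obj: TrackedObject, dt: float) -> TrackedObject:
    """Predict where the object is dt seconds later, assuming constant velocity."""
    return TrackedObject(obj.kind, obj.x + obj.vx * dt, obj.y + obj.vy * dt,
                         obj.vx, obj.vy, obj.t + dt)


# A cyclist 10 m ahead, moving towards the car at 4 m/s.
cyclist = TrackedObject("cyclist", x=10.0, y=2.0, vx=-4.0, vy=0.0, t=0.0)
future = predict(cyclist, dt=1.5)
print(future.x, future.y, future.t)  # 4.0 2.0 1.5
```

A real system would presumably learn far richer motion models than a straight line, but the principle is the same: once the scene is reduced to objects with coordinates, predicting the near future becomes a calculation on those coordinates.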

New: time and space are treated together

Another exciting development is that time is now handled more realistically in Tesla’s algorithms. In previous versions, the camera video was treated as a series of individual images, meaning that reality often did not stay coherent over time.

For example, a car that disappeared for a moment behind a larger car would also disappear from Tesla’s mental model. With the technology Tesla uses now, time and space are handled together. As humans, we know that a car does not suddenly cease to exist just because our view of it is blocked. It continues to exist somewhere in reality and in our mental model. In many ways, this is now also the case for Tesla’s algorithms.
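The difference between treating each image on its own and treating time and space together can be sketched in a few lines of Python. This is an illustrative toy, not how Tesla does it: the `Track` class, the `step` function and the `max_missed` cut-off are assumptions made for the example. The point is only that an object which is hidden for a few frames is kept in the model and moved along at its last known speed, instead of being deleted.

```python
from dataclasses import dataclass


@dataclass
class Track:
    x: float          # position along the road, in metres
    v: float          # speed, in metres per second
    missed: int = 0   # consecutive frames without a detection


def step(track, detection, dt, max_missed=30):
    """Advance one frame. `detection` is a measured position, or None if hidden.

    Returns the updated track, or None once the object has gone unseen for
    more than `max_missed` frames (a hypothetical cut-off).
    """
    if detection is None:
        # Hidden: keep the object alive and coast on its last known speed.
        track = Track(track.x + track.v * dt, track.v, track.missed + 1)
        return track if track.missed <= max_missed else None
    # Visible: re-estimate the speed from the movement since the last frame.
    return Track(detection, (detection - track.x) / dt, 0)


car = Track(x=0.0, v=10.0)
for seen in [1.0, 2.0, None, None, None, 6.0]:  # hidden for three frames
    car = step(car, seen, dt=0.1)
print(car)
```

During the three hidden frames the car stays in the model and keeps moving, so when it reappears at 6 metres the model is not surprised. A frame-by-frame approach would instead have lost the car and had to rediscover it as a new object.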

Norwegian artificial intelligence?

Tesla’s development team drew inspiration for its algorithms from the brain and from how the brain encodes time, space, and objects. In this field, Norway is considered a stronghold of knowledge.

Edvard and May-Britt Moser are among the world’s leading researchers on how the brain perceives space and time. In 2014, they were awarded the Nobel Prize in Physiology or Medicine together with John O’Keefe “for their discoveries of cells that constitute a positioning system in the brain.” In their laboratory in Trondheim, at the Kavli Institute for Systems Neuroscience, countless students have been trained in the same field. Can this knowledge now be used to inspire and produce “Norwegian AI”?

Bio-inspired artificial intelligence

It looks that way. Several Norwegian research groups, among them the Centre for Integrative Neuroplasticity (CINPLA) at the University of Oslo and the Living Technology Lab at OsloMet, have recently begun researching the interaction between neuroscience and artificial intelligence. This field is called bio-inspired artificial intelligence, and it is growing strongly internationally.

What could be more natural, then, than that when the Norwegian Artificial Intelligence Research Consortium (NORA) arranges a Nordic conference on artificial intelligence for young researchers, the opening lecture is given by brain researcher Edvard Moser, entitled “Space, Time, and Memory in Neural Networks of the Brain”? I hope the lecture will inspire future researchers in the field of artificial intelligence, and that these two Norwegian fields, neuroscience and artificial intelligence, will be linked more closely.

Forskersonen is forskning.no’s site for debate and popular science.