It is no longer news that robots perform work tasks on land. Using robots underwater for industrial and research purposes also has clear advantages. But the 3D camera that lets the robot “see” works worse under water than in air.

– On land, we find 3D cameras everywhere: in mobile phones, in industry, in self-driving cars and so on. But light travelling through water behaves a bit like light in fog. If you switch on a car's high beams in fog, you don't see any better; the fog just blinds you, says lead researcher Jens T. Thielemann at SINTEF.

Water is full of particles that scatter light, in the same way as the tiny water droplets that make up fog.

Focus on the depth of the image

Thielemann recently defended his doctoral thesis at the Department of Computer Science at the University of Oslo, on the use of 3D cameras underwater.

– We started from how light behaves in water in time, and how it behaves in space. Two different projects developed two different technologies, and the technologies complement each other, he says.

– One new thing we did was to focus on depth in the image, unlike others, who have tended to concentrate only on improving image density.

In the first project, the camera sends a short pulse of light towards the object the robot wants to see. The light first hits the “fog”, and part of it is scattered or reflected back. But the pulse continues until it hits the object, which then reflects a signal back to the camera.

A more logical solution

– We used a very fast laser and an image chip that together stepped forward in distance: here is fog, fog here, fog, fog… oh, there's the object. By stepping forward like this, we obtained depth information.

This is what Thielemann means by how light behaves in time. Instead of recording everything between the camera and the object, the camera only needs to read the signal returned from the object.
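
The gating idea can be sketched as a toy simulation. This is not the researchers' system: it assumes a refractive index of 1.33 for water, a made-up backscatter model and target, and hypothetical function names. Each gate delay t corresponds to a distance d = v·t/2, where v is the speed of light in water and the factor 2 accounts for the round trip; gates close to the camera, which see mostly backscatter, are skipped.

```python
import math

C_VACUUM = 299_792_458.0      # speed of light in vacuum, m/s
N_WATER = 1.33                # assumed refractive index of water
V_WATER = C_VACUUM / N_WATER  # approximate speed of light in water, m/s


def delay_to_distance(delay_s: float) -> float:
    """One-way distance for a round-trip delay (light goes out and back)."""
    return V_WATER * delay_s / 2.0


def distance_to_delay(distance_m: float) -> float:
    """Round-trip delay for light to reach distance_m and return."""
    return 2.0 * distance_m / V_WATER


def simulate_gate(distance_m: float, target_m: float) -> float:
    """Hypothetical signal for a gate opened at distance_m.

    Backscatter from the 'fog' is strong near the camera and decays with
    distance; the object adds a narrow peak around target_m.
    """
    backscatter = 5.0 * math.exp(-distance_m / 0.5)
    target = math.exp(-((distance_m - target_m) ** 2) / (2 * 0.1 ** 2))
    return backscatter + target


def sweep_gates(delays_s, read_gate, min_delay_s):
    """Step the gate forward in time and return the delay of the strongest
    return, ignoring gates earlier than min_delay_s (near-field backscatter)."""
    best_delay, best_signal = None, -math.inf
    for delay in delays_s:
        if delay < min_delay_s:
            continue  # "fog, fog, fog..."
        signal = read_gate(delay)
        if signal > best_signal:
            best_delay, best_signal = delay, signal
    return best_delay


if __name__ == "__main__":
    target_m = 4.0
    # Gates every 5 cm from 0.1 m to 8 m, expressed as round-trip delays.
    delays = [distance_to_delay(0.1 + 0.05 * k) for k in range(159)]
    found = sweep_gates(
        delays,
        lambda d: simulate_gate(delay_to_distance(d), target_m),
        min_delay_s=distance_to_delay(1.0),
    )
    print(f"object at about {delay_to_distance(found):.2f} m")
```

Skipping a fixed near range is a deliberate simplification; a practical system might instead model the backscatter and separate it from the object's return.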

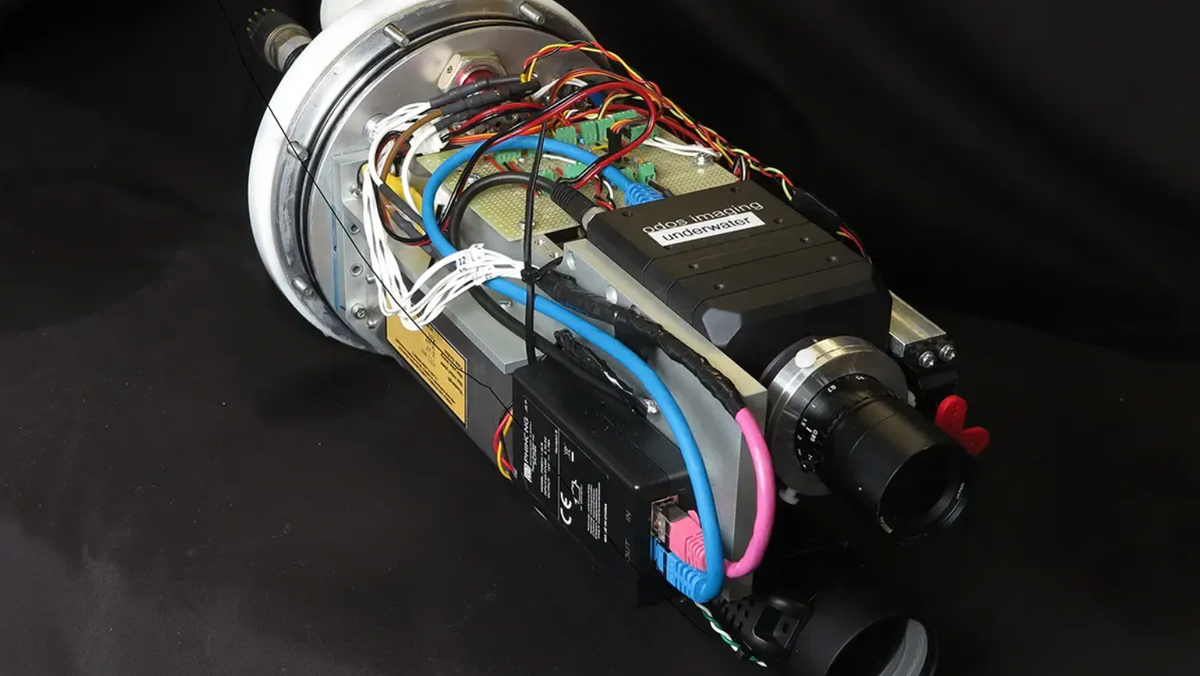

– This technology has been known for a long time, but it has required very complex and expensive components. The other new thing we did was to use the same semiconductor technology found in ordinary cameras instead. That means we can build partly from off-the-shelf components, which makes the solution accessible to everyone, explains Thielemann.

More advanced technology from land

By exploiting how light behaves in time, they were able to obtain images with a depth accuracy of about one centimetre.
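
A rough calculation shows why a very fast laser and image chip are needed. Assuming light travels at v = c/n in water, with n ≈ 1.33, the round-trip timing resolution Δt needed for a depth resolution Δd of one centimetre is approximately:

```latex
\Delta t = \frac{2\,\Delta d}{v} = \frac{2\,n\,\Delta d}{c}
\approx \frac{2 \times 1.33 \times 0.01\ \text{m}}{3.0 \times 10^{8}\ \text{m/s}}
\approx 8.9 \times 10^{-11}\ \text{s} \approx 89\ \text{ps}
```

In other words, centimetre-level depth corresponds to timing differences of less than a tenth of a nanosecond.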

To measure depth with millimetre precision, they instead had to start from how light behaves in space.

– Within about a metre, we humans combine information from our two eyes to judge the distance to objects around us. In the same way, we can build a camera that uses information from two different angles.
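
The principle behind the two “eyes” is triangulation. For an idealized pair of parallel pinhole cameras with focal length f (in pixels) and baseline B, a point that shifts by d pixels between the two images (its disparity) lies at depth Z = f·B/d. The sketch below assumes that simplified geometry, ignores refraction at the camera's underwater window, and uses made-up values for f and B; the function names are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for an idealized, rectified camera pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_error_px: float) -> float:
    """First-order depth uncertainty: dZ ~ Z**2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    f_px, b_m = 1400.0, 0.2  # hypothetical focal length and baseline
    for z in (0.5, 1.5):
        d = f_px * b_m / z  # disparity this depth would produce
        err_mm = 1000 * depth_error(f_px, b_m, z, 0.1)
        print(f"Z={z} m: disparity {d:.0f} px, error {err_mm:.2f} mm per 0.1 px")
```

With these hypothetical numbers, the error at 1.5 m is nine times the error at 0.5 m, because it grows with the square of the distance. That is one reason a triangulation camera is most precise at short range.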

To achieve this underwater, Thielemann and his colleagues further developed another technique known from land and built a camera based on it.

– Instead of two cameras acting as “eyes”, we replace one of them with a projector that casts a controlled light pattern onto the scene. It is not just one pattern, but many different patterns that together form a well-defined code, he explains.
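
A classic example of such a code is the binary Gray code, where each projector column is identified by the sequence of bright and dark values it receives across several patterns. The sketch below is a textbook Gray-code scheme, not the water-optimized codes the researchers developed; the function names and the 0.5 threshold are assumptions.

```python
def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Inverse of gray_encode."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def make_patterns(num_columns: int, num_bits: int) -> list[list[int]]:
    """patterns[b][x] is 1 if column x is lit in pattern b (most significant bit first)."""
    return [
        [(gray_encode(x) >> (num_bits - 1 - b)) & 1 for x in range(num_columns)]
        for b in range(num_bits)
    ]


def decode_pixel(intensities: list[float], threshold: float = 0.5) -> int:
    """Turn one camera pixel's intensities (one per pattern) into a column index."""
    g = 0
    for value in intensities:
        g = (g << 1) | (1 if value > threshold else 0)
    return gray_decode(g)


if __name__ == "__main__":
    patterns = make_patterns(num_columns=1024, num_bits=10)
    # Simulate a pixel that sees column 613, with attenuation and a constant background.
    observed = [0.8 * p[613] + 0.1 for p in patterns]
    print(decode_pixel(observed))
```

Gray codes are popular because neighbouring columns differ in only one pattern, so a misread bit at a stripe edge shifts the answer by one column rather than by a large jump. The decoded column, together with the pixel position, gives the two viewing directions needed for triangulation.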

The technologies complement each other

– There are many possible codes that can be sent. Again, the problem is the particles in the water, which disturb all the codes. But by understanding how the particles affect light's journey through water, we found the codes that are least disturbed. This is how we get results twice as good as traditional methods. In other words, we can maintain the same accuracy even when the water quality is only about half as good.

This technology has an accuracy down to one millimetre, but is less accurate at longer distances. This means that the two technologies complement each other, explains Thielemann.

The first works best at distances between one and seven to eight metres, and the second between a few tens of centimetres and one and a half metres. The first shows the robot where an installation is located underwater; the second lets the robot grip it and makes it possible to check that it is not damaged.

The article was first published on Titan.uio.no
